
YouTube has often been criticised for how it moderates content on the platform, as well as the comments posted by users. Recently, the video social network was criticised for its belated response in removing comments posted by paedophiles on videos featuring young girls. The latest controversy is bigger still. Yesterday, on April 2, an investigative report by Bloomberg came to light, quoting a host of present and former YouTube employees who did not approve of the way the company conducts its business. This is not surprising in itself: for an entity as massive as YouTube, there are bound to be some who are left disgruntled. In this case, however, the situation is a bit different, and should be a grave point of concern for our entire society.

The issue at hand covers a whole range of topics: conspiracy theories, inaccurate data, socio-political and racial propaganda, brutally toxic content, and more. This raises the question of social responsibility, and in turn serves as a coming-of-age lesson for the entire internet-based society that we live in today. YouTube, after having lived through its initial (and middle) years as something similar to a glorified garage studio with enough panache to uphold the principles of free speech, is a pop culture icon today. It is where creators have the intellectual freedom to take up the causes that humankind needs to fight for together. Unfortunately, there is also an ugly, vile underbelly that pulsates behind YouTube’s highly advanced content recommendation and engagement algorithms.

What makes it worse, however, is that YouTube apparently knows what is wrong and, despite that, has not attempted to rectify it for fear of losing out on the most important metric of today: “engagement”.

What has gone wrong?

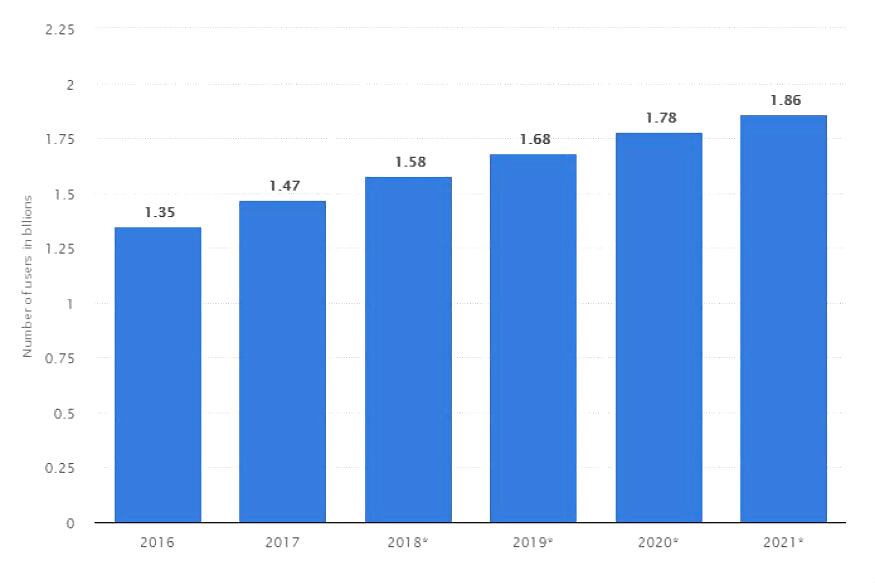

The issue in question is in line with what pretty much every social media platform is facing: curtailing the vile aspects of the internet while maintaining engagement. The Bloomberg report mentioned above touches upon the barrage of toxic content on YouTube, and talks about the platform’s reluctance to take relevant action against it. The problem gets bigger when you look at the scale of YouTube’s user base. Data from social media management site Hootsuite states that a staggering 1.9 billion logged-in users (that’s half the internet) visit YouTube every month, and the platform clocks over a billion hours of video consumption every day.

It is hardly breaking news that YouTube hosts an extensive list of videos, uploaded by its massive number of users, on topics such as conspiracy theories, hate speech, incitement, misinformation, and more disturbing content including racial, sexual, terrorist and other incendiary material. However, one would assume that YouTube’s policy on what qualifies as a fair video would naturally filter it all out. The problem is bigger, since YouTube has been repeatedly made aware of such content, and has itself put in “measures” such as surveys at the end of videos to give users the ability to flag a piece of content.

Yet, these videos apparently fall into a grey area, staying just within the bounds of what qualifies as fair content on YouTube. A separate report by Vice independently found that despite the devastating consequences of the Christchurch terror attack in New Zealand, neo-Nazi propaganda videos continue to thrive on YouTube and garner an audience, buoyed by YouTube’s recommendation engine and machine learning algorithms. This is not new. Racially abusive and discriminatory content from far-right and other obscure “broadcast publication” sources became a matter of grave contention during the last US elections. It is a matter that rival platform Facebook is still struggling to deal with, after facing allegations of its failure to curb propaganda despite having prior knowledge of such content.

YouTube’s recommendation engine basically ‘reads’ videos, extracting information from them via visual and language processing, powered by neural networks. It then sorts this information into categories, or information points, and then taps into its user base. The usage pattern of every user is studied to find out what each person is viewing, and the data points between videos are then matched to serve them with content similar to what they prefer to watch. To maximise the platform’s engagement potential, YouTube actively encourages users to make use of the autoplay feature, so that they can continue seeing videos similar to their preference.

This would be a good thing, if you were attempting to find out more details about, say, every villain in the Batman comics, or discover new post-rock music from the ‘90s. However, while it would not be beyond YouTube’s ability to filter the data points and flag (or outright ban) certain things so that such misinformation or hate-filled content is not spread on its platform, the company has failed to do so, despite being fully aware of it. The matter gets worse when one investigates further — Bloomberg’s report quotes data from extremism research firm Moonshot CVE to reveal that a significant number of channels that spread such vile content have reached over 170 million viewers, and the count does not appear to be stopping any time soon.
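To make the idea concrete, the matching described above, and the kind of flag-based filtering the article argues is within YouTube’s ability, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not YouTube’s actual system: the video names, the three-number feature vectors, and the functions `cosine`, `user_profile` and `recommend` are all hypothetical. Each video is reduced to a small list of scores, a user’s taste is the average of what they have watched, and unwatched videos are ranked by how closely they match that taste, with flagged videos left out of the ranking.

```python
import math

# Hypothetical feature vectors: [comics, music, conspiracy] scores per video.
FEATURES = {
    "batman_villains": [1.0, 0.0, 0.0],
    "joker_history": [0.9, 0.1, 0.0],
    "post_rock_mix": [0.0, 1.0, 0.0],
    "conspiracy_clip": [0.0, 0.0, 1.0],
    "comics_conspiracy": [0.6, 0.0, 0.8],
}


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def user_profile(watched, features):
    """Average the feature vectors of the videos a user has watched."""
    vectors = [features[v] for v in watched]
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def recommend(watched, features, flagged=frozenset(), top_n=2):
    """Rank unwatched, unflagged videos by similarity to the user's profile."""
    profile = user_profile(watched, features)
    candidates = [v for v in features if v not in watched and v not in flagged]
    candidates.sort(key=lambda v: cosine(profile, features[v]), reverse=True)
    return candidates[:top_n]


if __name__ == "__main__":
    # Without any flagging, the borderline video rides along on the user's interest in comics.
    print(recommend(["batman_villains"], FEATURES))
    # With the video flagged, it no longer appears in the recommendations.
    print(recommend(["batman_villains"], FEATURES, flagged={"comics_conspiracy"}))
```

In this toy setup, a viewer who has only watched a comics video is still served the borderline video, because it overlaps with their interests. Flagging removes it from recommendations, but the video itself remains in the catalogue.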

What is YouTube doing so far?

YouTube’s response to the reports from Bloomberg and Vice has been notably inadequate. While many employees have quit after disagreeing with YouTube’s decision to milk the engagement potential of such videos, the company continues to host such content on its platform, since it technically does not infringe upon its content policies.

After having been informed of such videos on its platform, YouTube spokespersons have stated that the platform has removed the ability of channels with such toxic content to monetise their work. The company has also disabled features such as likes and comments on these videos. Furthermore, it has reportedly removed such content from YouTube’s recommendation engine, essentially flagging the information points related to these videos.

These moves do have some impact. By restricting comments, YouTube is cutting down on an impromptu discussion forum for hate-mongering, crime, and racially and sexually abusive behaviour. Cutting down discourse, however, offers little respite, since discussions can easily be shifted to another platform and continued there. This leaves YouTube as the information source for dubious content. Lastly, removing such videos from the YouTube recommendation engine means their audience reach is automatically stifled, and such videos will (hopefully) not be recommended to unsuspecting users.

What is at stake?

However, this fails to deal with the biggest problem of the lot: discoverability. As long as these videos are on YouTube, a user who deliberately intends to look them up can do so. This is what makes the matter so important: leaving such toxic content up is alarming, and leaves open the possibility of future terror, propaganda, fear, abuse and forceful influence being inflicted through the platform. That’s hardly a sunny day out for a company that echoes Silicon Valley’s ideals of free speech, all in the name of engagement and additional revenue.

It remains to be seen whether YouTube chooses to respond to this situation, and how this affects its overall stance. It is disheartening to see one of the world’s biggest internet culture icons being dragged into this situation, and it calls for greater control and oversight of how the technology giants go about their everyday trade.

News18 has reached out independently to YouTube India, and will update this story if it develops further.
