Singapore: Ultrasound, the inaudible sound waves normally associated with cancer treatments and monitoring the unborn, may change the way we interact with our mobile devices.

Couple that with a different kind of wave (light, in the form of lasers) and we're edging towards a world of 3D holographic displays hovering in the air that we can touch, feel and control.

UK start-up Ultrahaptics, for example, is working with premium carmaker Jaguar Land Rover to create invisible air-based controls that drivers can feel and adjust. Rather than fumbling for the radio volume or temperature slider on the dashboard and taking their eyes off the road, drivers would feel ultrasound waves form the controls around their hand.

"You don't have to actually make it all the way to a surface, the controls find you in the middle of the air and let you operate them," says Tom Carter, co-founder and chief technology officer of Ultrahaptics.

Such technologies, proponents argue, are an advance on devices we control by gesture, such as Nintendo's Wii or Leap Motion's sensor device, which lets users control computers with hand gestures. That's because they mimic the tactile feel of real objects by firing pulses of inaudible sound at a spot in mid-air.
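The article does not describe Ultrahaptics' hardware in detail. As a rough illustration of how sound can be made to converge on a single spot in mid-air, the Python sketch below uses the textbook phased-array approach: each transducer in a flat grid fires with a small delay so that every wave arrives at the focal point at the same instant, where the waves add up. The 40 kHz frequency, the 16 x 16 grid with 10.5 mm spacing and all function names are assumptions made for this example, not details of any company's product.

```python
import math

# Assumed values for illustration only; not taken from any product.
SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C
FREQUENCY = 40_000.0    # Hz, assumed transducer frequency


def grid_array(n, pitch):
    """Positions (x, y, z) in metres of an n x n flat transducer grid centred on the origin."""
    offset = (n - 1) * pitch / 2
    return [(i * pitch - offset, j * pitch - offset, 0.0)
            for i in range(n) for j in range(n)]


def focus_delays(transducers, focal_point, c=SPEED_OF_SOUND):
    """Emission delay (seconds) for each transducer so that every wave
    reaches focal_point at the same instant."""
    distances = [math.dist(p, focal_point) for p in transducers]
    farthest = max(distances)
    # The farthest emitter fires first (delay 0); nearer emitters wait
    # for the extra travel time of the farthest one.
    return [(farthest - d) / c for d in distances]


def focal_gain(transducers, delays, point, freq=FREQUENCY, c=SPEED_OF_SOUND):
    """Magnitude of the summed unit-amplitude waves at `point`, divided by
    the number of transducers (1.0 means all waves arrive in phase).
    Ignores spreading loss and transducer directivity."""
    omega = 2 * math.pi * freq
    re = im = 0.0
    for p, tau in zip(transducers, delays):
        # Phase at `point` of a wave emitted after delay tau and
        # travelling the distance from p to point.
        phase = -omega * (tau + math.dist(p, point) / c)
        re += math.cos(phase)
        im += math.sin(phase)
    return math.hypot(re, im) / len(transducers)


if __name__ == "__main__":
    array = grid_array(16, 0.0105)   # 16 x 16 grid, 10.5 mm pitch (assumed)
    focus = (0.0, 0.0, 0.20)         # 20 cm above the centre of the array
    delays = focus_delays(array, focus)
    print(focal_gain(array, delays, focus))              # at the focus
    print(focal_gain(array, delays, (0.03, 0.0, 0.20)))  # 3 cm to the side
```

At the focus the gain comes out at essentially 1.0, while a point 3 cm to the side gets only a small fraction of that: the energy is concentrated where the hand is. A real device would also have to deal with transducer directivity and the spreading loss of each wave, neither of which this sketch models.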

They also go beyond the latest generation of tactile mobile interfaces, in which companies such as Apple and Huawei are building more responsiveness into the cold glass of a mobile device's screen.

Ultrasound promises to move interaction from the flat and physical to the three dimensional and air-bound. And that's just for starters.

By applying similar theories about waves to light, some companies hope not only to reproduce the feel of a mid-air interface but also to make it visible.

Japanese start-up Pixie Dust Technologies, for example, wants to pair mid-air haptics with tiny lasers that create visible holograms of those controls. This would allow users to interact with, say, large sets of data in a 3D aerial interface.

"It would be like the movie 'Iron Man'," says Takayuki Hoshi, a co-founder, referring to a sequence in the film in which the lead character, played by Robert Downey Jr., projects holographic images and data in mid-air from his computer and then manipulates them by hand.

Broken Promises

Japan has long been at the forefront of this technology. Hiroyuki Shinoda, considered the father of mid-air haptics, said he first had the idea of an ultrasound tactile display in the 1990s and filed his first patent in 2001.

His team at the University of Tokyo is using ultrasound technology to allow people to remotely see, touch and interact with objects or with each other. For now, the distance between the two sides is limited because the system relies on mirrors, but Keisuke Hasegawa, one of the system's inventors, says this could eventually be replaced by a transmitted signal, making it possible to interact over any distance.

To be sure, promises of sci-fi interfaces have been broken before. And even the more modest parts of this technology are some way off. Lee Skrypchuk, Jaguar Land Rover's human machine interface technical specialist, said technology like Ultrahaptics' was still five to seven years away from appearing in the company's cars.

And Hoshi, whose Pixie Dust has made promotional videos of people touching tiny mid-air sylphs, says the cost of components needs to fall further to make this technology commercially viable. "Our task for now is to tell the world about this technology," he says.

In the meantime, Pixie Dust is also using ultrasound to arrange particles into mid-air shapes, so-called acoustic levitation, and to build speakers that direct sound at some people in a space but not others, which Hoshi says could be useful in museums or at road crossings.

From Kitchen To Car

But the holy grail remains a mid-air interface that combines touch and visuals.

Hoshi says touching his laser plasma sylphs feels like a tiny explosion on the fingertips, and that this sensation would ideally be replaced by more natural-feeling ultrasound technology.

And even laser technology itself is a work in progress.

Another Japanese company, Burton Inc, offers live outdoor demonstrations of mid-air laser displays that flutter like fireflies. But founder Hidei Kimura says he is still trying to persuade local governments to use the technology during natural disasters, projecting signs that float in the sky alongside the country's usual loudspeaker alerts.

Perhaps the biggest obstacle to commercializing mid-air interfaces is making a pitch that appeals not just to consumers' fantasies but also to corporate customers' bottom lines.

Norwegian start-up Elliptic Labs, for example, says the world's biggest smartphone and appliance manufacturers are interested in its mid-air gesture interface because it requires no special chip and removes the need for a phone's optical sensor.

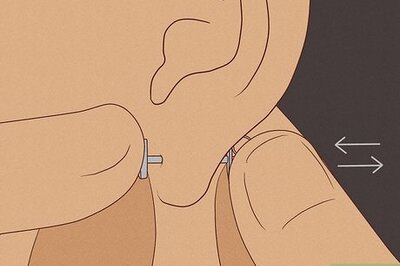

Elliptic CEO Laila Danielsen says her ultrasound technology uses existing microphones and speakers, allowing users to take a selfie, say, by waving at the screen.
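Elliptic Labs' own signal processing is not described here. As a generic illustration of how a speaker and a microphone alone can sense a nearby hand, the Python sketch below uses simple pulse-echo ranging: play a short tone burst, find the lag at which it best matches the microphone recording by cross-correlation, and convert that round-trip time into a distance. The 20 kHz burst, the 96 kHz sample rate and the function names are hypothetical choices for this example.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s (assumption: air at about 20 degrees C)
SAMPLE_RATE = 96_000    # Hz (hypothetical; must exceed twice the burst frequency)


def make_pulse(freq=20_000.0, cycles=8, rate=SAMPLE_RATE):
    """Short Hann-windowed tone burst at an assumed frequency."""
    n = int(rate * cycles / freq)
    return [math.sin(2 * math.pi * freq * i / rate)
            * 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1)))
            for i in range(n)]


def echo_delay_samples(recording, pulse, min_lag=0):
    """Lag (in samples) at which `pulse` best matches `recording`, found by
    brute-force cross-correlation. min_lag can be used to skip the direct
    speaker-to-microphone path."""
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, len(recording) - len(pulse) + 1):
        score = sum(r * p for r, p in zip(recording[lag:lag + len(pulse)], pulse))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def distance_from_lag(lag, rate=SAMPLE_RATE, c=SPEED_OF_SOUND):
    """Convert a round-trip delay to a one-way distance in metres,
    assuming the speaker and microphone sit at the same point."""
    return c * (lag / rate) / 2


if __name__ == "__main__":
    pulse = make_pulse()
    # Simulate an echo from a hand 0.2 m away: 0.4 m round trip, attenuated to 30 %.
    lag_true = round(2 * 0.2 / SPEED_OF_SOUND * SAMPLE_RATE)
    recording = [0.0] * 400
    for i, s in enumerate(pulse):
        recording[lag_true + i] += 0.3 * s
    lag = echo_delay_samples(recording, pulse)
    print(lag, distance_from_lag(lag))
```

For a hand 20 cm away the echo lands about 112 samples into the recording, and the sketch recovers that lag and converts it back to roughly 0.2 m. A real phone would also have to separate the echo from the direct speaker-to-microphone path, room reflections and noise, which this example does not attempt.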

Gesture interfaces, she concedes, are nothing new. Samsung Electronics had infrared gesture sensors in its phones, but, she says, "people didn't use it".

Danielsen says her technology is better because it's cheaper and broadens the range of situations in which users can control their devices. The next step, she says, is bringing touchless gestures into the kitchen, or into cars.
